Title of the Project: Indian Sign Language to Spoken Language Translator using data from Wearable Multi-sensor Armbands

Principal Investigator: Dr. Rinki Gupta

Co-Principal Investigator: None

Funding Agency: Science & Engineering Research Board (SERB), DST

Funding Scheme: Early Career Research Award

Project value: Rs. 25,81,350

Total Duration: 30 months

Status: Ongoing (Start date 13.2.2017)


Project Summary:

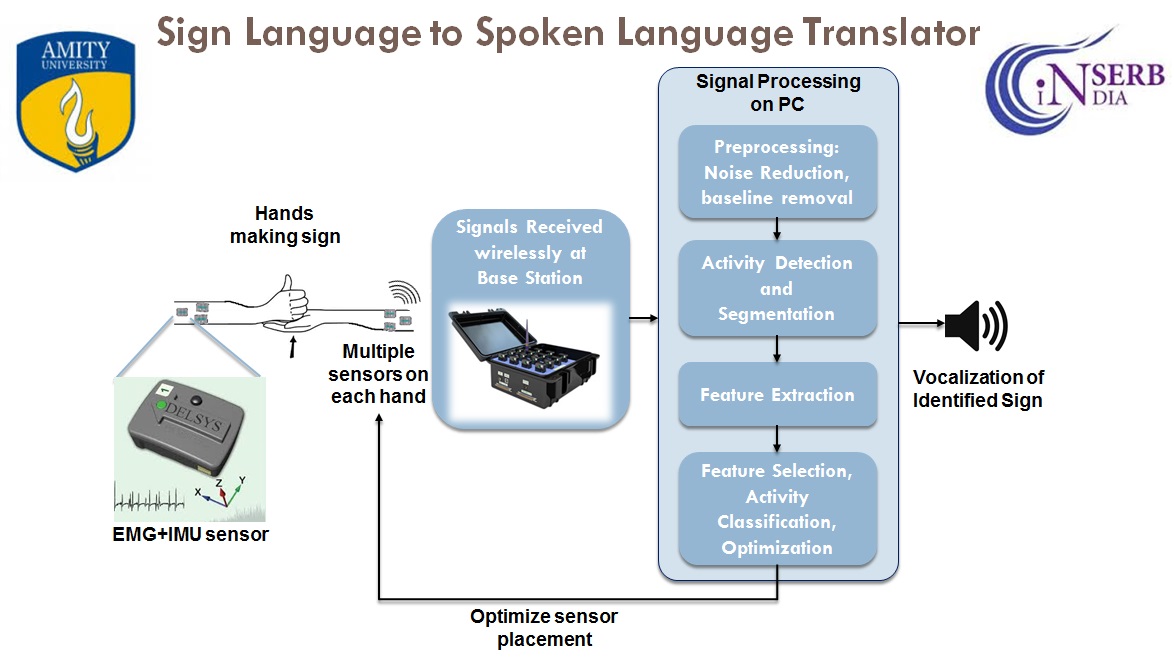

Sign language is the first language of a deaf and mute person. A sign language is based on the use of specific gestures, orientations and motions of the hands, as well as facial expressions, lip movements and body postures, to convey information in a structured manner, just as in any spoken language. However, a sign language user often experiences a language barrier when conversing with a non-signer. The use of a written medium or a human interpreter is not always convenient or possible. In India, there are only about 250 sign language interpreters for as many as 18 million deaf Indians. The objective of this project is to work towards developing an Indian Sign Language to speech translator that helps a sign language user convey ideas to, or seek easily understandable information from, a non-signer in a natural manner. Such a system will also be useful to a mute person or a person with a speech disability for interacting over the telephone.

The proposed system will consist of multiple electromyography (EMG) and motion sensors placed in armbands that can be worn by the user on the forearms. The measurements of the EMG and motion sensors corresponding to hand movements during different signs will be recorded to form a database of several commonly used signs in the Indian Sign Language. The data from the multiple sensors in the two armbands will be processed in an integrated manner to enable recognition of isolated signs from the Indian Sign Language. The recognized sign will be vocalized in English in a male or female voice.
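The processing chain described above (sensor windows from both armbands, a database of labelled signs, recognition of an isolated sign) can be illustrated with a minimal sketch. This is not the project's actual method: the feature choice (mean absolute value for EMG, mean for motion channels), the nearest-centroid classifier, and all function names are assumptions made only for illustration, and the speech output step is left out.

```python
import math
from typing import Dict, List, Sequence

# A window is one list of samples per sensor channel (hypothetical layout).
Window = List[List[float]]


def mean_absolute_value(channel: Sequence[float]) -> float:
    """Mean absolute value, a common amplitude feature for surface EMG."""
    return sum(abs(x) for x in channel) / len(channel)


def mean_value(channel: Sequence[float]) -> float:
    """Plain mean, used here as a simple feature for motion channels."""
    return sum(channel) / len(channel)


def extract_features(emg_left: Window, imu_left: Window,
                     emg_right: Window, imu_right: Window) -> List[float]:
    """Concatenate per-channel features from both armbands into one vector."""
    features: List[float] = []
    for emg, imu in ((emg_left, imu_left), (emg_right, imu_right)):
        features.extend(mean_absolute_value(ch) for ch in emg)
        features.extend(mean_value(ch) for ch in imu)
    return features


def fit_centroids(samples: List[List[float]],
                  labels: List[str]) -> Dict[str, List[float]]:
    """Build the 'database': one average feature vector per sign label."""
    grouped: Dict[str, List[List[float]]] = {}
    for vec, label in zip(samples, labels):
        grouped.setdefault(label, []).append(vec)
    return {
        label: [sum(col) / len(vecs) for col in zip(*vecs)]
        for label, vecs in grouped.items()
    }


def classify(features: Sequence[float],
             centroids: Dict[str, List[float]]) -> str:
    """Return the sign whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))
```

In use, each recorded sign would be passed through `extract_features`, the labelled vectors through `fit_centroids`, and a new window through `classify`; the returned label could then be handed to any text-to-speech engine. A real system would likely use richer features and a stronger classifier, as the publications listed below suggest.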


List of Publications

- Karush Suri, Rinki Gupta, “Classification of Hand Gestures from Wearable IMUs using Deep Neural Network”, IEEE 2nd International Conference on Inventive Communication and Computational Technologies (ICICCT 2018), 20-21 Apr 2018, pp. 1-6, accepted for publication in IEEE Xplore.

- Anamika, Rinki Gupta, “Correlation based Binary Evolution Algorithm for Feature Selection in Hand Activity Classification”, IEEE 5th International Conference on Computing for Sustainable Global Development (INDIACom-2018), 14-16 Mar 2018, pp. 1-5, presented and will be published in IEEE Xplore.

- Karush Suri, Rinki Gupta, “Dual Stage Classification of Hand Gestures using Surface Electromyogram”, IEEE 5th International Conference on Signal Processing and Integrated Networks (SPIN-2018), 22-23 Feb 2018, pp. 1-5, presented and will be published in IEEE Xplore.

- Rinki Gupta, Karush Suri, “Activity Detection from Wearable Electromyogram Sensors using Hidden Markov Model”, IEEE 2nd International Conference on Computing Methodologies and Communication (ICCMC 2018), 15-16 Feb 2018, pp. 1-5, presented and will be published in IEEE Xplore.

- Rinki Gupta, S. Saxena, A. Sazid, “Channel selection in multi-channel surface electromyogram based hand activity classifier”, IEEE 4th International Conference on Computational Intelligence and Communication Technology (CICT-2018), pp. 1-5, presented and will be published in IEEE Xplore.

- Rinki Gupta, “Appropriate AR modeling for Surface Electromyogram Signals and its application in Hand Activity Classification”, IEEE 1st International Conference on Computing and Communication Technologies for Smart Nation (IC3TSN 2017), 12-14 Oct 2017, pp. 1-5, published in IEEE Xplore, DOI: 10.1109/IC3TSN.2017.8284491.
