Infrastructure

Specifications

- Hardware Specifications

- Software Specifications

- Tools

- Additional Resources

The NVIDIA A100 GPU server is a high-performance computing platform designed for artificial intelligence (AI), deep learning, and scientific computing workloads. Here are the specifications for the NVIDIA A100 GPU server:

CPU

- Dual AMD EPYC 7003 series processors with up to 64 cores per processor

- Support for PCIe Gen4

- Support for up to 4TB of DDR4 memory

GPU

- NVIDIA A100 Tensor Core GPU with 6,912 CUDA cores and 40GB (HBM2) or 80GB (HBM2e) of high-bandwidth memory

- Up to 19.5 teraflops of single-precision (FP32) performance, 156 teraflops of TF32 Tensor Core performance, or 312 teraflops of FP16 Tensor Core performance (dense, without structured sparsity)

- Support for NVIDIA NVLink and PCIe Gen4 for GPU-to-GPU communication

- Support for NVIDIA Tensor Cores for accelerated AI training and inference

- Support for NVIDIA Multi-Instance GPU (MIG) technology, which partitions a single A100 into up to seven isolated GPU instances so that multiple users or workloads can share one GPU
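
The peak rates above can be reproduced as units × fused multiply-adds (FMAs) per clock × 2 FLOPs per FMA × clock rate. This is a back-of-the-envelope sketch, not NVIDIA's published method; the 1,410 MHz boost clock, the 432 Tensor Cores (108 SMs × 4), and the per-Tensor-Core FMA rates are assumptions drawn from the A100 architecture and are not stated in this document.

```python
"""Back-of-the-envelope peak throughput for an NVIDIA A100 (dense, no sparsity)."""

BOOST_CLOCK_HZ = 1.41e9  # assumed A100 boost clock: 1,410 MHz
CUDA_CORES = 6912        # FP32 CUDA cores, as listed above
TENSOR_CORES = 432       # assumed: 108 SMs x 4 third-generation Tensor Cores
FLOPS_PER_FMA = 2        # one fused multiply-add counts as a multiply plus an add

# Assumed dense FMA operations per Tensor Core per clock, by input precision.
FMA_PER_TENSOR_CORE = {"TF32": 128, "FP16": 256}


def peak_tflops(units: int, fma_per_clock: int, clock_hz: float = BOOST_CLOCK_HZ) -> float:
    """Peak TFLOPS = units * FMAs per unit per clock * 2 FLOPs per FMA * clock rate."""
    return units * fma_per_clock * FLOPS_PER_FMA * clock_hz / 1e12


fp32 = peak_tflops(CUDA_CORES, 1)  # each CUDA core retires one FP32 FMA per clock
tf32 = peak_tflops(TENSOR_CORES, FMA_PER_TENSOR_CORE["TF32"])
fp16 = peak_tflops(TENSOR_CORES, FMA_PER_TENSOR_CORE["FP16"])

if __name__ == "__main__":
    print(f"FP32 (CUDA cores):   {fp32:6.1f} TFLOPS")
    print(f"TF32 (Tensor Cores): {tf32:6.1f} TFLOPS")
    print(f"FP16 (Tensor Cores): {fp16:6.1f} TFLOPS")
```

Running it prints about 19.5, 155.9, and 311.9 TFLOPS. These are theoretical peaks; sustained throughput in real workloads is lower and depends on clocks under load, memory bandwidth, and kernel efficiency.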

Storage

- Support for up to 12 NVMe SSDs

- Support for up to 8 hot-swappable SAS/SATA drives

Networking

- Dual 25GbE or 100GbE Ethernet ports

- Support for Mellanox ConnectX-6 network adapters
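
When sizing data movement, the choice between 25GbE and 100GbE can be estimated from link speed alone. A minimal sketch, assuming decimal units (1 GB = 8 gigabits) and ideal line rate; the `efficiency` parameter is a hypothetical fudge factor for protocol and storage overhead, not a measured value.

```python
"""Rough time to move a dataset over the server's Ethernet links."""


def transfer_seconds(dataset_gigabytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Seconds to move `dataset_gigabytes` (decimal GB) over a `link_gbps` link.

    `efficiency` is a hypothetical overhead factor in (0, 1]; 1.0 means ideal line rate.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    gigabits = dataset_gigabytes * 8  # bytes -> bits
    return gigabits / (link_gbps * efficiency)


if __name__ == "__main__":
    for gbps in (25, 100):
        print(f"1 TB over {gbps}GbE: {transfer_seconds(1000, gbps):.0f} s at line rate")
```

At ideal line rate, 1 TB takes 320 s over 25GbE and 80 s over 100GbE; real transfers are slower once TCP, filesystem, and disk limits are included.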

The NVIDIA A100 GPU server supports a wide range of software tools and programming languages, including Python. Here are some of the software specifications related to programming and Python:

Operating system

- The NVIDIA A100 GPU server supports various operating systems, including Linux (Red Hat, CentOS, SUSE, Ubuntu, and others) and VMware ESXi.

CUDA

- The NVIDIA CUDA Toolkit is a programming environment for developing GPU-accelerated applications. It includes the CUDA C++ programming language and various libraries for machine learning, signal processing, and scientific computing. The A100 (compute capability 8.0) requires CUDA Toolkit 11.0 or later, and later toolkit releases continue to support it.
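
Because A100 support starts at CUDA 11.0, a quick compatibility check compares toolkit versions numerically. A minimal sketch, assuming the version arrives as a dotted string such as the one reported by `nvcc --version` or by a framework build; the function names are illustrative.

```python
"""Check whether a CUDA Toolkit version string is new enough to target the A100 (sm_80)."""
from __future__ import annotations

# The A100 has compute capability 8.0; CUDA 11.0 was the first toolkit to support it.
MIN_CUDA_FOR_A100 = (11, 0)


def parse_cuda_version(version: str) -> tuple[int, int]:
    """Turn strings like '11.4' or '12.2.140' into a (major, minor) tuple."""
    parts = version.strip().split(".")
    if len(parts) < 2:
        raise ValueError(f"unexpected CUDA version string: {version!r}")
    return int(parts[0]), int(parts[1])


def supports_a100(version: str) -> bool:
    """True if the toolkit version is at least CUDA 11.0."""
    return parse_cuda_version(version) >= MIN_CUDA_FOR_A100


if __name__ == "__main__":
    for v in ("10.2", "11.4", "12.2.140"):
        print(v, supports_a100(v))
```

Comparing integer tuples rather than raw strings avoids the trap where `"11.10" < "11.4"` as text even though 11.10 is the newer release.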

Python

- The NVIDIA A100 GPU server supports Python, which is a popular high-level programming language for scientific computing and machine learning. The server can be used with various Python libraries for machine learning, including TensorFlow, PyTorch, and MXNet.

- NVIDIA's deep learning framework containers, distributed through the NGC catalog, provide pre-configured Docker images for popular deep learning frameworks, including TensorFlow, PyTorch, and MXNet. These containers include GPU-optimized builds of the frameworks and their libraries and can be used to run deep learning workloads on the NVIDIA A100 GPU server.
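
A quick way to confirm that a Python framework actually sees the GPU, whether on the host or inside a framework container, is to ask it for the device properties. A minimal sketch, assuming PyTorch is the framework in use; it returns `None` when PyTorch or a CUDA device is missing, and `describe_cuda_device` is an illustrative name.

```python
"""Report which CUDA device PyTorch sees, if any (sketch; assumes PyTorch)."""
from __future__ import annotations


def describe_cuda_device(index: int = 0) -> str | None:
    """Return a one-line summary of CUDA device `index`, or None if unavailable."""
    try:
        import torch  # optional dependency; absent on CPU-only machines
    except ImportError:
        return None
    if not torch.cuda.is_available() or index >= torch.cuda.device_count():
        return None
    props = torch.cuda.get_device_properties(index)
    return (
        f"{props.name}: {props.total_memory / 2**30:.1f} GiB, "
        f"{props.multi_processor_count} SMs, "
        f"compute capability {props.major}.{props.minor}"
    )


if __name__ == "__main__":
    print(describe_cuda_device() or "No CUDA device visible to PyTorch")
```

On a full (non-MIG) A100 the compute capability should read 8.0; the exact name string and memory figure depend on the board variant and driver.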

Other features

- BMC with IPMI support for remote management

- Access to the NVIDIA NGC Catalog: NVIDIA NGC is the hub for GPU-optimized software for deep learning, machine learning, and HPC. It provides containers, pretrained models, model scripts, and industry solutions so that data scientists, developers, and researchers can focus on building solutions and gathering insights faster.

- NVIDIA AI Enterprise: the software layer of the NVIDIA AI platform. It accelerates the data science pipeline and streamlines the development and deployment of production AI, including generative AI, computer vision, and speech AI. It bundles over 50 frameworks, pretrained models, and development tools intended to simplify building and deploying AI in the enterprise.

Overall, the NVIDIA A100 GPU server is a powerful and versatile platform for high-performance computing workloads, particularly those involving AI and deep learning.

Software tools

- Many GPU-accelerated libraries are available, e.g., CuPy, cuDF, NVIDIA DALI, NVIDIA cuDNN, and NVIDIA TensorRT.

- The NVIDIA A100 GPU server supports various other software tools commonly used in HPC and AI workloads, including Open MPI.

Containerization Tool:

- Docker: Docker is an open-source platform that enables developers to build, deploy, run, update, and manage containers—standardized, executable components that combine application source code with the operating system (OS) libraries and dependencies required to run that code in any environment.
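
For GPU workloads, Docker exposes the GPUs to a container through the `--gpus` flag, which requires the NVIDIA Container Toolkit on the host. A minimal sketch that builds the command in Python; the image reference is a hypothetical placeholder (substitute a real tag from the NGC catalog), and the helper only runs the command when Docker is installed and the placeholder has been replaced.

```python
"""Build (and optionally run) a `docker run` command that exposes GPUs to a container."""
from __future__ import annotations

import shutil
import subprocess

# Hypothetical image reference; replace <tag> with a real tag from the NGC catalog.
IMAGE = "nvcr.io/nvidia/pytorch:<tag>"


def gpu_docker_cmd(image: str, command: list[str], gpus: str = "all") -> list[str]:
    """Return argv for `docker run --rm --gpus <gpus> <image> <command...>`."""
    return ["docker", "run", "--rm", "--gpus", gpus, image, *command]


if __name__ == "__main__":
    argv = gpu_docker_cmd(IMAGE, ["nvidia-smi"])
    print(" ".join(argv))
    # Only execute when Docker exists and the placeholder tag has been filled in.
    if shutil.which("docker") and "<tag>" not in IMAGE:
        subprocess.run(argv, check=True)
```

Running `nvidia-smi` inside the container is a simple smoke test: if it lists the A100, the container runtime is passing the GPU through.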

Cluster Management Tool:

- Kubernetes: Kubernetes automates the operational tasks of container management and includes built-in commands for deploying applications, rolling out changes to them, scaling them up and down to fit changing needs, monitoring them, and more, making applications easier to manage.
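
On Kubernetes, GPUs are typically exposed by the NVIDIA device plugin (or the NVIDIA GPU Operator) as the extended resource `nvidia.com/gpu`, which a container requests under `resources.limits`. A minimal sketch, assuming that plugin is installed, that emits a Pod manifest as JSON (which `kubectl apply -f` accepts); the pod name and image are hypothetical.

```python
"""Emit a minimal Pod manifest requesting GPUs (sketch; assumes the NVIDIA device plugin)."""
from __future__ import annotations

import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1, command: list[str] | None = None) -> dict:
    """Return a Pod spec dict requesting `gpus` whole GPUs via `nvidia.com/gpu`."""
    if gpus < 1:
        raise ValueError("gpus must be >= 1")
    container = {
        "name": name,
        "image": image,
        # Extended resources such as GPUs are requested as limits.
        "resources": {"limits": {"nvidia.com/gpu": gpus}},
    }
    if command:
        container["command"] = command
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {"restartPolicy": "Never", "containers": [container]},
    }


if __name__ == "__main__":
    # Hypothetical pod name and image; pipe the output to `kubectl apply -f -`.
    manifest = gpu_pod_manifest("gpu-smoke-test", "nvcr.io/nvidia/cuda:<tag>", command=["nvidia-smi"])
    print(json.dumps(manifest, indent=2))
```

The scheduler places the pod only on a node with a free GPU; with MIG enabled, the device plugin can instead advertise per-instance resources, depending on its configuration.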

GPU monitoring tools:

- Grafana: an open-source analytics and interactive visualization web application. It can ingest data from a large number of data sources, query that data, and display it in customizable charts and dashboards for analysis.

- Prometheus: It is an open-source technology designed to provide monitoring and alerting functionality for cloud-native environments, including Kubernetes. It can collect and store metrics as time-series data, recording information with a timestamp.
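
Prometheus and Grafana do not read GPU counters themselves. A common setup, assumed here, is NVIDIA's DCGM Exporter publishing metrics such as `DCGM_FI_DEV_GPU_UTIL` for Prometheus to scrape and Grafana to chart. A minimal sketch that queries the Prometheus HTTP API (`/api/v1/query`) and parses the instant-vector response; the server address is hypothetical, and the `gpu` label name depends on the exporter's configuration.

```python
"""Query Prometheus for per-GPU utilization exported by DCGM Exporter (sketch)."""
from __future__ import annotations

import json
import urllib.parse
import urllib.request

PROMETHEUS_URL = "http://localhost:9090"  # hypothetical Prometheus address


def build_query_url(base_url: str, promql: str) -> str:
    """URL for an instant query against the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def parse_instant_vector(payload: dict, label: str = "gpu") -> dict[str, float]:
    """Map each series' `label` value to its sample value from a /api/v1/query response."""
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error')}")
    result = {}
    for series in payload["data"]["result"]:
        _timestamp, value = series["value"]  # each sample is [unix_time, "value as string"]
        result[series["metric"].get(label, "?")] = float(value)
    return result


def gpu_utilization(base_url: str = PROMETHEUS_URL) -> dict[str, float]:
    """Fetch current DCGM_FI_DEV_GPU_UTIL (percent) for every GPU Prometheus has scraped."""
    url = build_query_url(base_url, "DCGM_FI_DEV_GPU_UTIL")
    with urllib.request.urlopen(url, timeout=5) as resp:
        return parse_instant_vector(json.load(resp))
```

The same PromQL expression can be pasted into a Grafana panel backed by the Prometheus data source to chart utilization over time.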

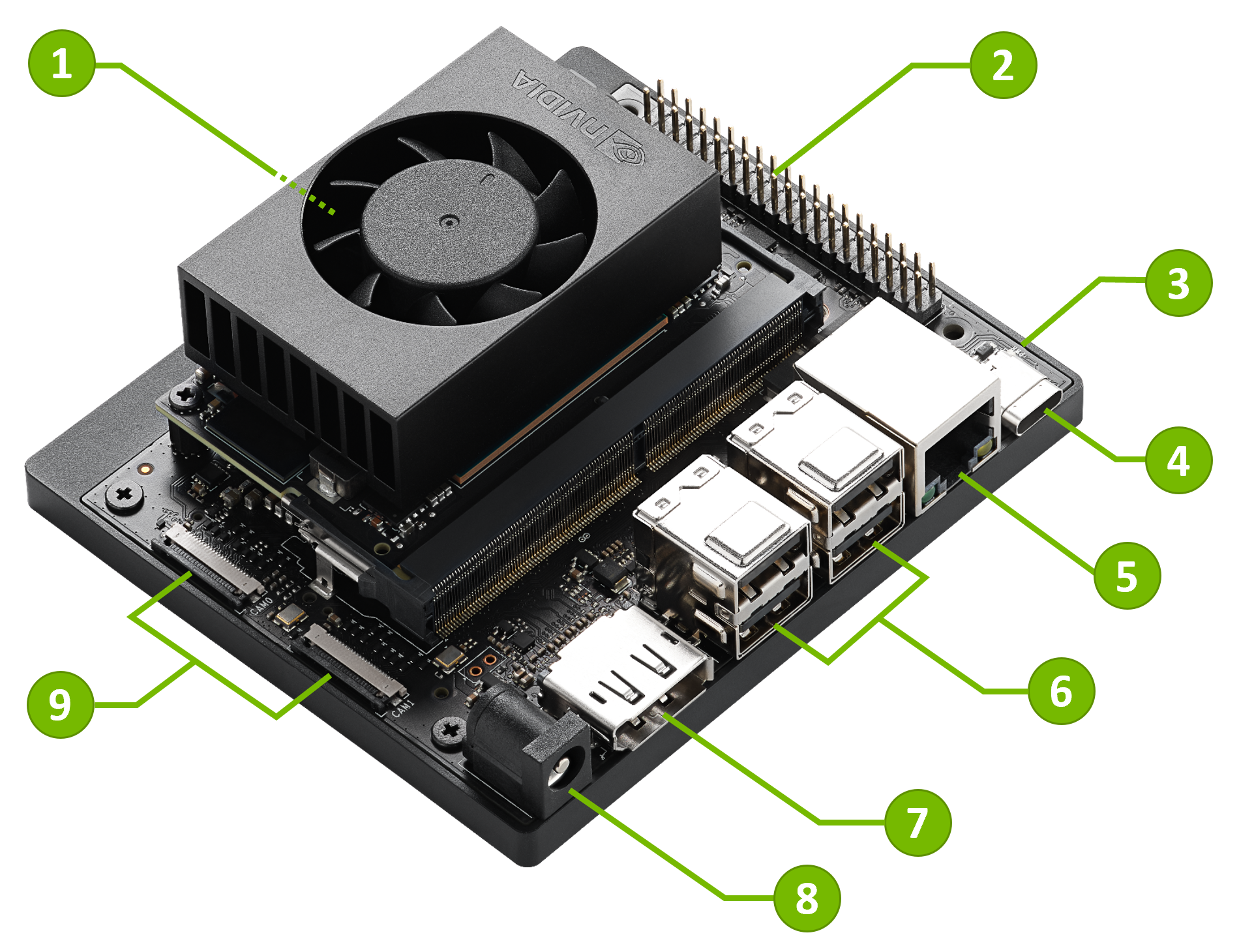

NVIDIA Jetson Nano Module:

- The Jetson Nano is a small, low-cost, single-board computer developed by NVIDIA specifically for artificial intelligence (AI) and robotics applications.

- The Jetson Nano features a 128-core Maxwell GPU, which provides hardware acceleration for AI and deep learning workloads that would otherwise run on the CPU.

- It is designed to be cost-effective, making it accessible to hobbyists, students, and developers interested in AI and robotics.
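
The same cores × 2 FLOPs per FMA × clock arithmetic shows where the Jetson Nano's 128-core Maxwell GPU sits relative to a data-center GPU. A back-of-the-envelope sketch, assuming a 921.6 MHz maximum GPU clock and FP16 running at twice the FP32 rate; both constants are assumptions, not figures from this document.

```python
"""Peak GPU throughput of the Jetson Nano's 128-core Maxwell GPU (back-of-the-envelope)."""

NANO_CUDA_CORES = 128
NANO_GPU_CLOCK_HZ = 921.6e6  # assumed maximum GPU clock: 921.6 MHz
FLOPS_PER_FMA = 2            # multiply + add
FP16_RATE_MULTIPLIER = 2     # assumed: paired half-precision ops run at 2x the FP32 rate


def nano_peak_gflops(fp16: bool = False) -> float:
    """Theoretical peak GFLOPS for FP32, or for FP16 when `fp16` is True."""
    fp32 = NANO_CUDA_CORES * FLOPS_PER_FMA * NANO_GPU_CLOCK_HZ / 1e9
    return fp32 * (FP16_RATE_MULTIPLIER if fp16 else 1)


if __name__ == "__main__":
    print(f"FP32: {nano_peak_gflops():.1f} GFLOPS")
    print(f"FP16: {nano_peak_gflops(fp16=True):.1f} GFLOPS")
```

This prints about 235.9 GFLOPS (FP32) and 471.9 GFLOPS (FP16), in line with the roughly 472 GFLOPS commonly quoted for the Jetson Nano and roughly 80 times below the A100's 19.5 teraflops FP32 peak.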

Jetson Nano Module Key Features:

- 1x GbE, 4x USB 3.0, 1x 4Kp60 HDMI output, 1x DP.

- Stacked Outputs.

- 2x 2-lane MIPI CSI-2.

- 1x 4-lane MIPI CSI-2.

- 40-pin Expansion Header.

- 12-pin Button Header.

- 1x Micro-SD Card Slot.

- Operating temperature: 0°C to 65°C.